🌟 Editor's Note

Welcome to another exciting week in the Vision AI ecosystem! We've got a packed newsletter full of insights, events, and inspiring stories from the heart of innovation.

🗓️ Tool Spotlight

Focoos SDK offers a suite of RT-DETR-based object detection models tuned for different speed/accuracy trade-offs. Trained on the COCO (80 classes) and Objects365 (365 classes) datasets, the models range from large to nano backbones.

The top-performing fai-rtdetr-l-coco model achieves 53.06 AP at 87 FPS, while lighter models reach up to 269 FPS with somewhat lower accuracy. The Objects365 model covers far more classes, but at lower accuracy (~34.6 AP).
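To relate those throughput numbers to a per-frame time budget, FPS can be converted to milliseconds per frame (1000 / FPS). Below is a minimal sketch using the two FPS values quoted above; it assumes they are one-frame-at-a-time measurements, since the benchmark hardware and batch size are not stated here. `fps_to_ms` is a hypothetical helper, not part of the Focoos SDK.

```python
def fps_to_ms(fps: float) -> float:
    """Convert throughput in frames per second to per-frame latency in milliseconds."""
    return 1000.0 / fps

# FPS figures quoted in the Focoos model overview
for name, fps in [("fai-rtdetr-l-coco", 87), ("fastest lightweight variant", 269)]:
    print(f"{name}: {fps} FPS ~ {fps_to_ms(fps):.1f} ms/frame")
# fai-rtdetr-l-coco: 87 FPS ~ 11.5 ms/frame
# fastest lightweight variant: 269 FPS ~ 3.7 ms/frame
```

At about 11.5 ms per frame, even the large model fits comfortably inside the ~33 ms budget of a 30 FPS camera, before accounting for pre- and post-processing.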

These models support flexible deployment, from edge devices to cloud inference, and can be fine-tuned on custom datasets, making them versatile for real-time computer vision applications. (link)

🚀 Blog Spotlight

Machine Vision Triggering for Image Acquisition

Precise triggering is essential to high-speed machine vision: capturing each image at the exact moment a part is in position minimizes blur, false rejects, and downtime.

Elementary ML’s recent guide explains how hardware triggers outperform software ones in production settings, outlines trigger sources (sensors, PLCs, encoders), covers signal integrity (TTL vs differential), and details camera I/O setup plus multi-camera synchronization.
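Encoder-based triggering is easiest to see with numbers. The sketch below computes how many encoder pulses should separate consecutive triggers so that one image is taken per part on a conveyor. The encoder resolution, roller circumference, and part pitch are hypothetical values, and `pulses_per_trigger` is an illustrative helper; real cameras and PLCs expose this divider under vendor-specific settings.

```python
def pulses_per_trigger(encoder_ppr: int, roller_circumference_mm: float,
                       part_pitch_mm: float) -> int:
    """Encoder pulses between triggers so that one image is taken per part.

    encoder_ppr: pulses per revolution of the (hypothetical) shaft encoder
    roller_circumference_mm: belt travel per encoder revolution
    part_pitch_mm: distance between leading edges of consecutive parts
    """
    pulses_per_mm = encoder_ppr / roller_circumference_mm
    return round(part_pitch_mm * pulses_per_mm)

# Hypothetical line: 2048-PPR encoder on a 200 mm roller, parts every 150 mm
print(pulses_per_trigger(2048, 200.0, 150.0))  # 1536
```

Because the trigger is tied to belt position rather than elapsed time, image spacing stays correct when the conveyor speeds up or slows down, which is one reason encoder triggers are preferred over timer-based software triggers.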

It also offers practical troubleshooting for missed or double triggers and provides a checklist to ensure reliable, high-throughput image acquisition systems. (link)
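Double triggers often come from a noisy or bouncing sensor edge. A common remedy, sketched here as a general technique rather than taken from the guide, is a lockout (debounce) window: any edge arriving within a set time of the last accepted trigger is ignored. The edge timestamps and the 5 ms window are hypothetical.

```python
def debounce(edge_times_ms: list[float], lockout_ms: float) -> list[float]:
    """Return the trigger edges that survive a lockout window.

    An edge is accepted only if at least `lockout_ms` has passed since the
    previously *accepted* edge; rejected edges do not restart the window.
    """
    accepted: list[float] = []
    for t in sorted(edge_times_ms):
        if not accepted or t - accepted[-1] >= lockout_ms:
            accepted.append(t)
    return accepted

# Sensor edges: each part produces a real edge plus a bounce ~1 ms later
edges = [0.0, 1.2, 40.0, 40.8, 80.0]
print(debounce(edges, lockout_ms=5.0))  # [0.0, 40.0, 80.0]
```

The window must stay shorter than the minimum time between real parts; otherwise genuine triggers are dropped and a double-trigger problem turns into a missed-trigger one.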

🦄 Startup Spotlight

Visionify: AI-Powered Workplace Safety

Visionify Inc. is a Denver-based startup specializing in AI-driven computer vision for workplace safety and compliance in industrial environments. Its flagship platform, VisionAI, connects to existing CCTV infrastructure to provide real-time monitoring, hazard detection (e.g., PPE violations, slips, falls, zone breaches), and automated alerts without additional hardware.

They support cloud, edge, and on-premises deployment and have earned enterprise certifications such as SOC 2 Type II.

In 2025, the company reached ~$3.6M in revenue with a 33-person team, showing strong traction in the manufacturing, logistics, and safety-compliance markets. (link)

🔥 Paper to Factory

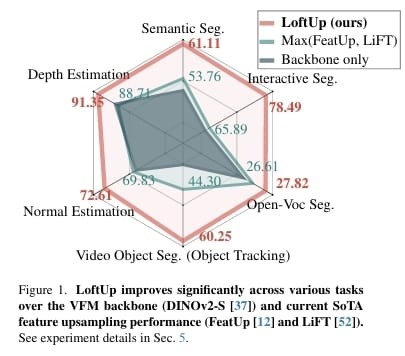

This week's paper: “LoftUp: Learning a Coordinate-Based Feature Upsampler for Vision Foundation Models”

The paper tackles a key limitation of vision foundation models (VFMs) such as DINOv2 and CLIP: their output features are low-resolution, which limits detail-sensitive tasks like segmentation or fine-grained detection.
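The resolution gap comes from patch tokenization: a ViT-based VFM produces one feature vector per image patch. DINOv2 uses 14×14-pixel patches and CLIP ViT-B/16 uses 16×16, so the feature grid is roughly the input size divided by the patch size. A small sketch, assuming non-overlapping patches and no padding (actual preprocessing varies); `feature_grid` is a hypothetical helper:

```python
def feature_grid(height: int, width: int, patch: int) -> tuple[int, int]:
    """Spatial size of a ViT feature map (one token per non-overlapping patch)."""
    return height // patch, width // patch

print(feature_grid(224, 224, 14))  # (16, 16)  e.g. DINOv2 at 224 px
print(feature_grid(448, 448, 14))  # (32, 32)
print(feature_grid(224, 224, 16))  # (14, 14)  e.g. CLIP ViT-B/16
```

At 224 px, each DINOv2 feature therefore summarizes 196 pixels, so object boundaries finer than a patch are blurred unless the features are upsampled.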

The authors propose a novel upsampler: a coordinate-based cross-attention transformer that takes the high-resolution image together with the low-resolution VFM features and outputs sharper, high-resolution features.
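To make "coordinate-based cross-attention" concrete, here is a single-head, untrained toy version of the idea; it is not LoftUp's architecture, which is a trained and more elaborate model. Each high-resolution pixel forms a query from its normalized (x, y) coordinates and RGB color, attends over all low-resolution feature tokens, and receives a softmax-weighted average of them. Random projection matrices stand in for learned weights, and `coord_cross_attention_upsample` is a hypothetical name.

```python
import math
import random

def coord_cross_attention_upsample(image, lr_feats, dim=8, seed=0):
    """Toy coordinate-based cross-attention upsampler (untrained, random weights).

    image:    H x W grid of (r, g, b) tuples in [0, 1]  (high-res input)
    lr_feats: h x w grid of C-dim feature lists          (low-res VFM output)
    Returns an H x W grid of C-dim features.
    """
    rng = random.Random(seed)
    H, W = len(image), len(image[0])
    h, w = len(lr_feats), len(lr_feats[0])
    C = len(lr_feats[0][0])

    # Random projections stand in for learned query/key weights.
    Wq = [[rng.gauss(0, 1) for _ in range(5)] for _ in range(dim)]  # from (x, y, r, g, b)
    Wk = [[rng.gauss(0, 1) for _ in range(C)] for _ in range(dim)]  # from a low-res feature

    def matvec(M, v):
        return [sum(m * x for m, x in zip(row, v)) for row in M]

    # One key per low-res token; values are the raw VFM features themselves.
    tokens = [lr_feats[i][j] for i in range(h) for j in range(w)]
    keys = [matvec(Wk, t) for t in tokens]

    out = []
    for y in range(H):
        row = []
        for x in range(W):
            # Query from the pixel's normalized coordinates and its color.
            q = matvec(Wq, [x / max(W - 1, 1), y / max(H - 1, 1), *image[y][x]])
            scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
            m = max(scores)  # subtract max for a numerically stable softmax
            weights = [math.exp(s - m) for s in scores]
            z = sum(weights)
            # Softmax-weighted average of low-res features -> one high-res feature.
            row.append([sum(wt * t[c] for wt, t in zip(weights, tokens)) / z
                        for c in range(C)])
        out.append(row)
    return out

# Usage: upsample a 2x2 grid of 4-dim features to match an 8x8 image.
rng = random.Random(1)
image = [[(rng.random(), rng.random(), rng.random()) for _ in range(8)] for _ in range(8)]
lr = [[[rng.random() for _ in range(4)] for _ in range(2)] for _ in range(2)]
hr = coord_cross_attention_upsample(image, lr)
print(len(hr), len(hr[0]), len(hr[0][0]))  # 8 8 4
```

Because every pixel queries every low-res token, the output can follow image edges (through the RGB term in the query) instead of inheriting the blocky patch grid, which is the intuition behind attention-based upsamplers; training is what makes those attention weights meaningful.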

To train it, they build high-resolution pseudo-ground-truth features using segmentation masks and self-distillation, and demonstrate significant improvements over existing feature upsampling methods. (link)
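One simple way masks can sharpen feature targets is to average features within each mask segment, so that the refined map changes only at mask boundaries. The sketch below shows that idea in isolation. It is a simplified illustration, not the paper's exact pseudo-ground-truth recipe, and the self-distillation stage is not shown; `mask_refine` and the toy inputs are hypothetical.

```python
def mask_refine(features, mask):
    """Average features inside each mask segment (toy pseudo-label sharpening).

    features: H x W grid of C-dim feature lists (e.g. a naively upsampled map)
    mask:     H x W grid of integer segment ids (e.g. from a class-agnostic segmenter)
    Pixels in the same segment receive that segment's mean feature.
    """
    sums, counts = {}, {}
    for frow, mrow in zip(features, mask):
        for f, sid in zip(frow, mrow):
            acc = sums.setdefault(sid, [0.0] * len(f))
            for c, v in enumerate(f):
                acc[c] += v
            counts[sid] = counts.get(sid, 0) + 1
    means = {sid: [v / counts[sid] for v in acc] for sid, acc in sums.items()}
    # Copy each mean so output pixels do not share (alias) the same list.
    return [[list(means[sid]) for sid in mrow] for mrow in mask]

# Blurry 1-dim features across a boundary between segment 0 and segment 1
feats = [[[0.0], [0.2], [0.9]],
         [[0.1], [0.3], [1.0]]]
seg = [[0, 0, 1],
       [0, 0, 1]]
print(mask_refine(feats, seg))
```

Segment 0 collapses to its mean (about 0.15) and segment 1 to 0.95, giving a clean step at the mask edge.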

🏆 Community Spotlight

Researchers at ICCV 2025 discuss their latest work highlighted at the conference

Intel’s latest video discusses how to choose the right computer vision task for your AI project

Roboflow’s latest video walks through building best-in-class vision models for the edge

Reddit / X corner:

Till next time,