🌟 Editor's Note

Welcome to another exciting week in the Vision AI ecosystem! We've got a packed newsletter full of insights, events, and inspiring stories from the heart of innovation.

🗓️ Tool Spotlight

NVIDIA releases Cosmos Reason 2!

NVIDIA has launched Cosmos Reason 2, a major upgrade to its open reasoning vision-language model (VLM) designed for physical AI — giving robots and AI agents the ability to see, understand, plan, and act in the real world with human-like reasoning.

Cosmos Reason 2 improves spatio-temporal understanding, supports long-context inputs up to 256K tokens, and offers flexible deployment with 2B and 8B parameter models.

Key enhancements include 2D/3D object localization, trajectory data, OCR support, and timestamp precision.

Common use cases include video analytics, data annotation and critique, and robot planning and control — enabling developers to extract insights, automate dataset labeling, and generate step-by-step action plans for robots. [link]

A sample prompt for generating detailed, time-stamped captions for a race car video.

🚀 Blog Spotlight

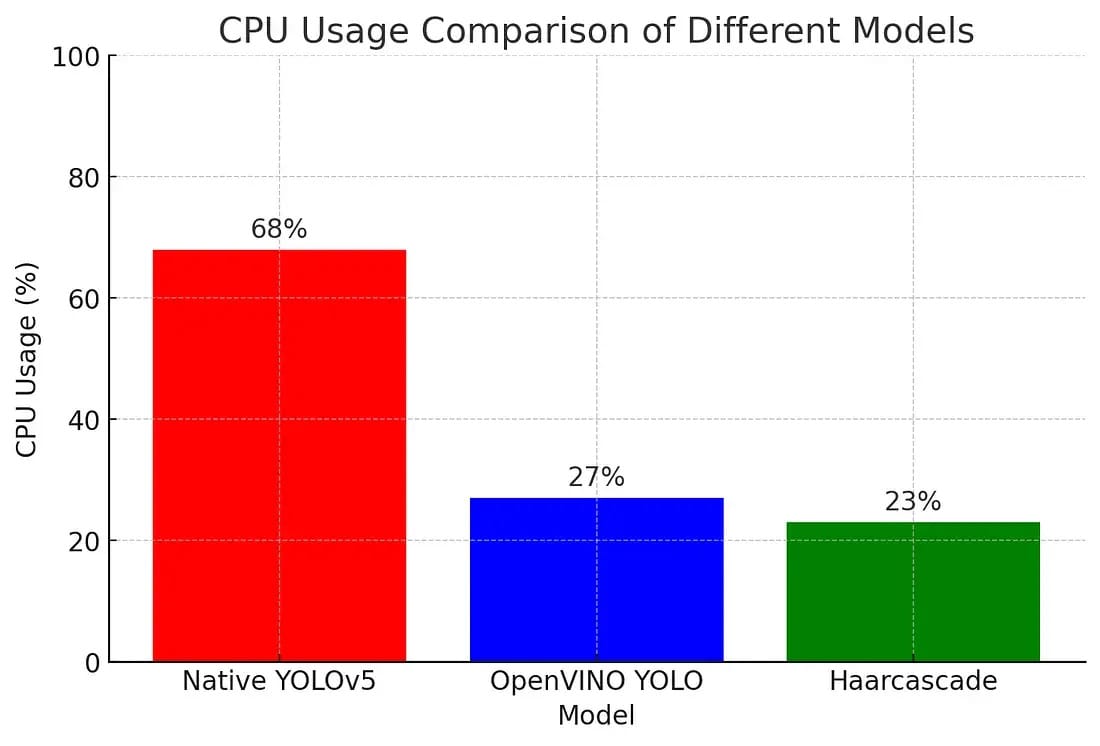

Optimizing YOLO with OpenVINO

In humanoid robot soccer, fast and efficient vision is crucial, but deep learning models like YOLO are too computationally heavy for on-board hardware, causing high latency and resource drain.

To address this, the author optimized YOLO using Intel’s OpenVINO toolkit on an Intel NUC platform. OpenVINO transforms models into an optimized intermediate representation and leverages CPU/GPU acceleration, dramatically reducing computation load.

This optimization cut CPU usage from 68% to 27% and reduced inference time from about 50.6 ms to 17.5 ms per frame, fast enough for real-time object detection tasks such as ball tracking.
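To put those numbers in perspective, here is a quick back-of-the-envelope calculation in Python. It only uses the figures quoted above; the variable and function names are our own, and the frame rates assume inference is the sole per-frame cost, which is a simplification.

```python
def frames_per_second(latency_ms: float) -> float:
    """Convert per-frame latency (milliseconds) to throughput (frames per second)."""
    return 1000.0 / latency_ms


# Figures quoted in the blog post summary.
baseline_ms = 50.6    # inference time per frame before OpenVINO
optimized_ms = 17.5   # inference time per frame after OpenVINO
baseline_cpu = 68.0   # CPU usage (%) before
optimized_cpu = 27.0  # CPU usage (%) after

speedup = baseline_ms / optimized_ms
baseline_fps = frames_per_second(baseline_ms)
optimized_fps = frames_per_second(optimized_ms)
cpu_relative_drop = (baseline_cpu - optimized_cpu) / baseline_cpu

print(f"Speedup: {speedup:.2f}x")
print(f"Throughput: {baseline_fps:.1f} fps -> {optimized_fps:.1f} fps")
print(f"CPU usage reduced by {cpu_relative_drop:.0%} relative to baseline")
```

In other words, the reported latency drop works out to a speedup of roughly 2.9x, taking the detector from under 20 frames per second to well above the 30 fps usually treated as real time.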

The result is a leaner vision pipeline that frees up processing for balance, planning, and other robot tasks — a significant win for real-time humanoid robotics. [link]

🦄 Startup Spotlight

DriveBuddyAI is an AI-powered driver and fleet safety startup that uses computer vision and advanced analytics to make commercial driving safer and more efficient.

The platform combines in-vehicle AI devices with a cloud dashboard to monitor driver behavior, detect risks like distraction or drowsiness, and provide real-time collision warnings and safety insights.

It helps fleet managers assess performance, reduce high-risk events, optimize operations, and support data-driven insurance decisions.

DriveBuddyAI’s technology aims to bridge the gap between current fleet safety systems and future autonomous mobility, fostering transparent decision-making across drivers, fleet owners, and insurers.

🔥 Paper to Factory

This paper introduces Quantile Rendering (Q-Render), a new method for efficiently handling high-dimensional features in 3D Gaussian Splatting, a popular scene representation used in computer vision for tasks like open-vocabulary segmentation.

Existing approaches compress features at the cost of quality. Q-Render instead sparsely samples only the most influential Gaussians along each viewing ray, maintaining feature fidelity while drastically cutting computation.
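To give a feel for why sparse sampling helps, here is a minimal sketch in plain Python. It is not the paper's quantile-based selection rule, which this summary does not spell out. Instead it swaps in a simpler stand-in: compute standard front-to-back alpha-blending weights along one ray, keep only the `k` highest-weight Gaussians, and blend their features. The function names, the top-k rule, and the renormalization step are all our own assumptions for illustration.

```python
from typing import List, Sequence


def blending_weights(alphas: Sequence[float]) -> List[float]:
    """Front-to-back compositing weights: w_i = alpha_i * prod_{j<i} (1 - alpha_j)."""
    weights = []
    transmittance = 1.0  # fraction of light not yet absorbed
    for alpha in alphas:
        weights.append(alpha * transmittance)
        transmittance *= 1.0 - alpha
    return weights


def sparse_render(
    alphas: Sequence[float],
    features: Sequence[Sequence[float]],
    k: int,
) -> List[float]:
    """Blend features from only the k highest-weight Gaussians on a ray.

    Illustrative top-k stand-in (an assumption), not the paper's Q-Render rule.
    """
    weights = blending_weights(alphas)
    # Indices of the k most influential Gaussians by blending weight.
    top = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)[:k]
    total = sum(weights[i] for i in top)
    dim = len(features[0])
    out = [0.0] * dim
    if total == 0.0:
        return out
    for i in top:
        w = weights[i] / total  # renormalize over the kept Gaussians
        for d in range(dim):
            out[d] += w * features[i][d]
    return out


# Toy ray with three Gaussians and 2-dimensional features.
alphas = [0.5, 0.5, 0.1]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = sparse_render(alphas, features, k=2)
print(result)
```

The point of the sketch is the cost model: the per-ray work on feature vectors scales with `k` times the feature dimension rather than with the total number of Gaussians the ray touches, which matters most when features are hundreds of dimensions wide.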

The authors also propose Gaussian Splatting Network (GS-Net), which predicts these features in a generalizable way.

On benchmarks like ScanNet and LeRF, the framework outperforms prior methods and enables real-time rendering, with a speedup of up to about 43.7× on 512-dimensional features. Code will be released publicly. [link]

🏆 Community Spotlight

Till next time,